Sine Function Approximation Neural Network Diagram


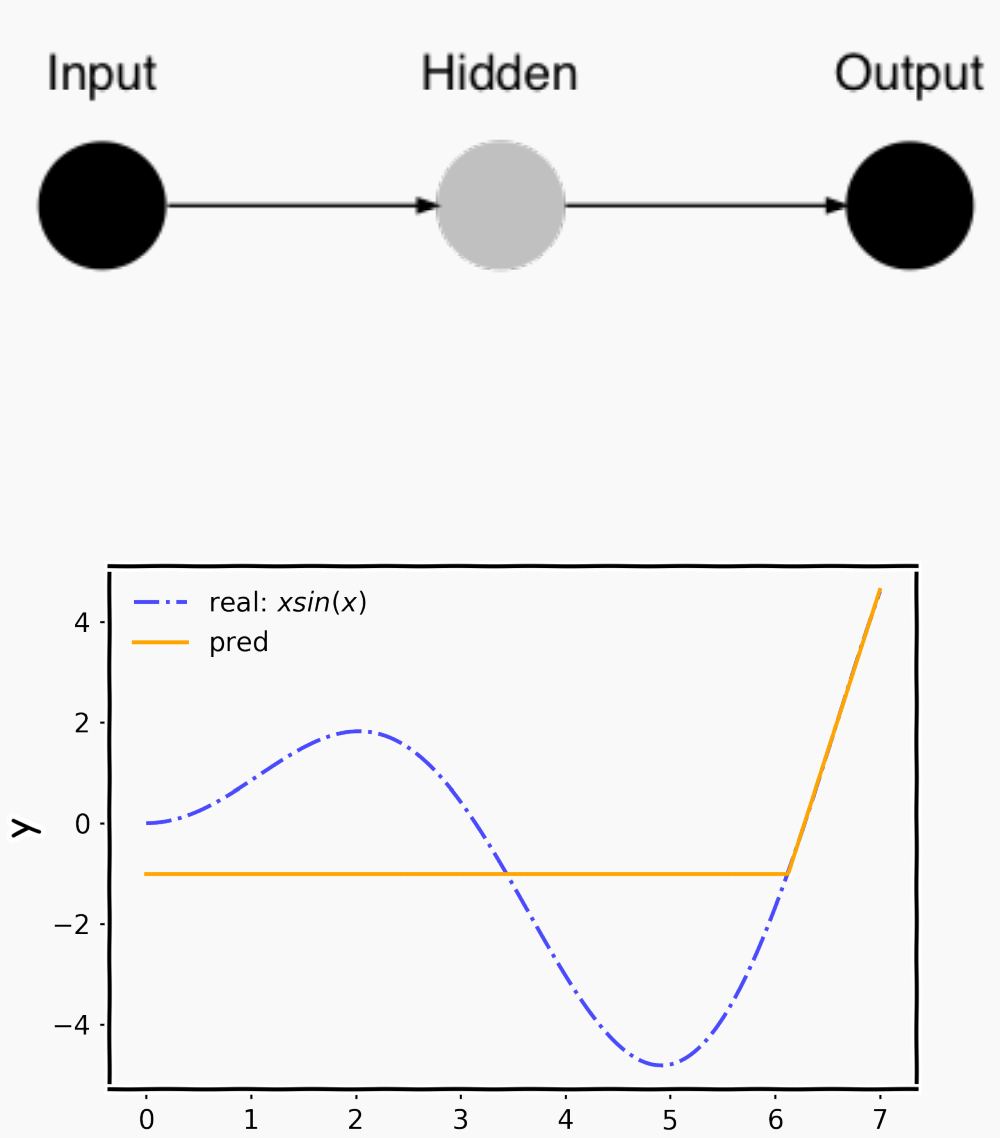

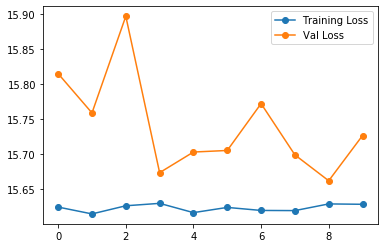

However, I find that my model often converges to a state with more local extrema than the data has.
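One way to make this symptom measurable is to count the local extrema of the model's predictions on a dense grid and compare that count with the count for the target. The sketch below is pure Python. It uses a hypothetical helper, `count_local_extrema`, and a made-up "rippled" prediction in place of a real model's output. It is a diagnostic, not a fix.

```python
import math


def count_local_extrema(ys, tol=1e-9):
    """Count interior points where the discrete slope changes sign.

    Steps with |dy| <= tol are treated as flat and skipped, so a
    plateau is not counted as extra extrema.
    """
    count = 0
    prev_sign = 0
    for a, b in zip(ys, ys[1:]):
        dy = b - a
        if abs(dy) <= tol:
            continue
        sign = 1 if dy > 0 else -1
        if prev_sign != 0 and sign != prev_sign:
            count += 1  # slope flipped: a local max or min lies between samples
        prev_sign = sign
    return count


# sin(x) sampled on [0, 2*pi]: one interior maximum and one interior minimum.
xs = [2 * math.pi * i / 200 for i in range(201)]
target = [math.sin(x) for x in xs]
# Hypothetical over-fitted prediction: the sine plus a small high-frequency ripple.
prediction = [math.sin(x) + 0.05 * math.sin(25 * x) for x in xs]

print(count_local_extrema(target))      # 2
print(count_local_extrema(prediction))  # more than 2
```

Running the same count on a real model's predictions after each epoch shows whether the extra extrema appear early or only late in training.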

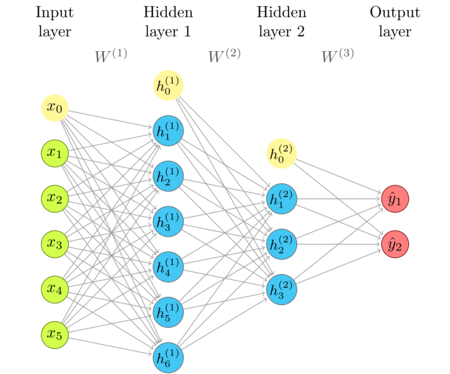

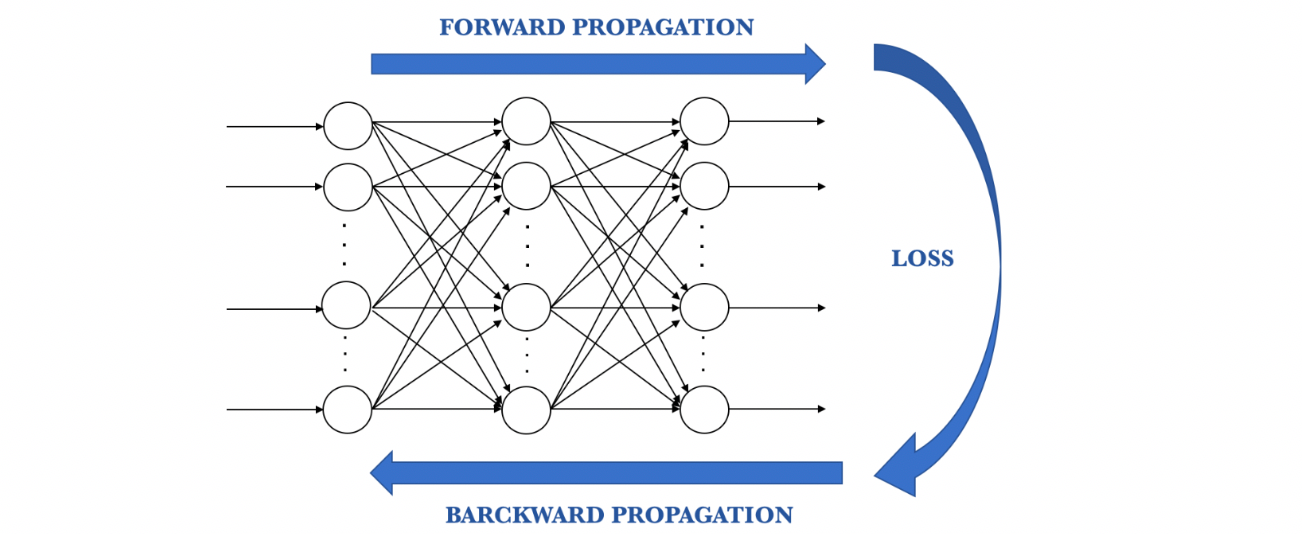

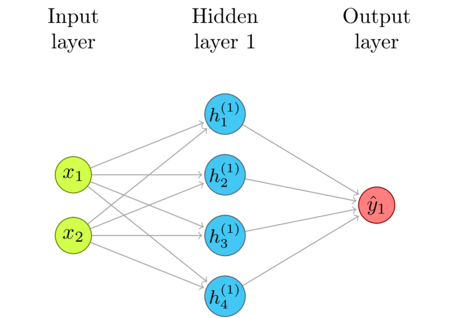

Using four hidden neurons with sigmoid activation and an output layer with linear activation works fine. Many works in the literature have proposed and used tree forms of neural networks [9], [10], [11]. You'll understand why it's true that neural networks can compute any function, and how the result relates to deep neural networks.
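Here is a minimal pure-Python sketch of the setup just described: four sigmoid hidden neurons feed one linear output neuron, and the network is trained on samples of sin(x). The following settings are my own assumptions, not taken from the text: the interval [-pi, pi], the 64 training points, per-sample SGD, the learning rate, and the epoch count.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_sine_mlp(hidden=4, epochs=1000, lr=0.05, seed=0):
    """Fit y = sin(x) on [-pi, pi] with `hidden` sigmoid units and one linear
    output unit, using per-sample SGD and hand-written backpropagation."""
    rng = random.Random(seed)
    data = [(x, math.sin(x)) for x in
            (-math.pi + 2 * math.pi * i / 63 for i in range(64))]
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]  # input -> hidden weights
    b1 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden biases
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                          # output bias

    def forward(x):
        h = [sigmoid(w1[j] * x + b1[j]) for j in range(hidden)]
        return sum(w2[j] * h[j] for j in range(hidden)) + b2, h

    def mse():
        return sum((forward(x)[0] - y) ** 2 for x, y in data) / len(data)

    initial = mse()
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            out, h = forward(x)
            err = out - y  # dL/d(out) for L = 0.5 * (out - y)**2
            for j in range(hidden):
                # Chain rule through the linear output and sigmoid'(z) = h(1 - h).
                grad_z = err * w2[j] * h[j] * (1.0 - h[j])
                w2[j] -= lr * err * h[j]
                w1[j] -= lr * grad_z * x
                b1[j] -= lr * grad_z
            b2 -= lr * err
    return initial, mse(), lambda x: forward(x)[0]


initial, final, model = train_sine_mlp()
print(f"MSE before training: {initial:.4f}, after: {final:.4f}")
print(model(math.pi / 2), math.sin(math.pi / 2))
```

Changing `hidden` or `epochs` is a quick way to see how the fit changes. A fixed `seed` keeps runs comparable.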

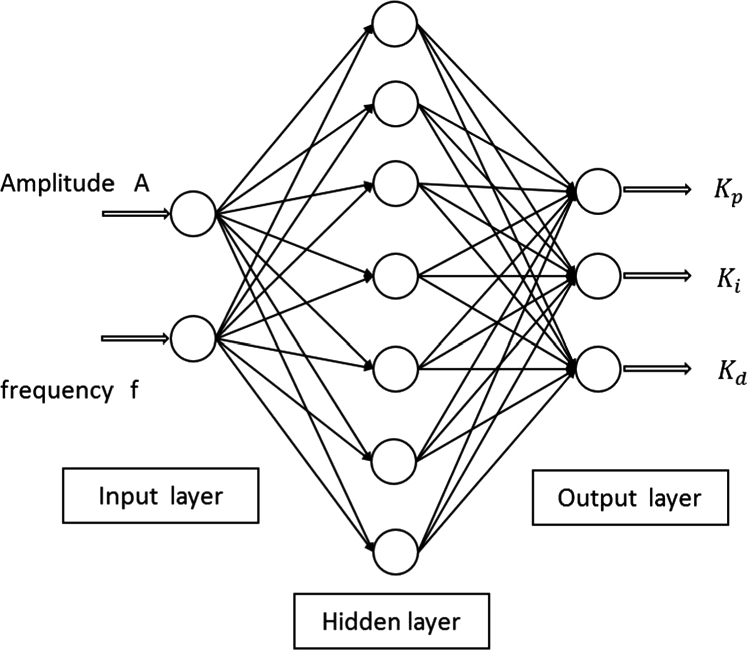

I am trying to approximate a sine function with a neural network in Keras. For learning purposes, I have implemented a simple neural network framework that only supports multilayer perceptrons and simple backpropagation. The output of the … Here is my most recent model architecture.
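For a home-made framework that only supports MLPs and hand-written backpropagation, a finite-difference gradient check is a common first test of the backward pass. The sketch below uses a hypothetical `Dense` layer class, not the author's framework. It compares backprop's weight gradients against central differences of the squared-error loss.

```python
import math
import random


class Dense:
    """Hypothetical fully connected layer: y = act(W x + b), act in {"sigmoid", "linear"}."""

    def __init__(self, n_in, n_out, act, rng):
        self.W = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_out)]
        self.act = act

    def forward(self, x):
        self.x = x
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.W, self.b)]
        self.y = [1.0 / (1.0 + math.exp(-v)) for v in z] if self.act == "sigmoid" else z
        return self.y

    def backward(self, dy):
        """Take dL/dy, store dL/dW and dL/db, and return dL/dx."""
        if self.act == "sigmoid":
            dz = [d * y * (1.0 - y) for d, y in zip(dy, self.y)]
        else:
            dz = list(dy)
        self.dW = [[d * xi for xi in self.x] for d in dz]
        self.db = dz
        return [sum(self.W[i][j] * dz[i] for i in range(len(dz)))
                for j in range(len(self.x))]


def loss_and_backprop(layers, x, target):
    """Forward pass, loss 0.5 * ||out - target||^2, then backward pass."""
    out = x
    for layer in layers:
        out = layer.forward(out)
    grad = [o - t for o, t in zip(out, target)]
    loss = 0.5 * sum(g * g for g in grad)
    for layer in reversed(layers):
        grad = layer.backward(grad)
    return loss


def max_weight_grad_error(layers, x, target, eps=1e-6):
    """Largest |backprop dL/dW - central finite difference| over all weights."""
    loss_and_backprop(layers, x, target)
    analytic = [[row[:] for row in layer.dW] for layer in layers]
    worst = 0.0
    for k, layer in enumerate(layers):
        for i, row in enumerate(layer.W):
            for j in range(len(row)):
                saved = row[j]
                row[j] = saved + eps
                loss_plus = loss_and_backprop(layers, x, target)
                row[j] = saved - eps
                loss_minus = loss_and_backprop(layers, x, target)
                row[j] = saved  # restore the weight
                numeric = (loss_plus - loss_minus) / (2 * eps)
                worst = max(worst, abs(numeric - analytic[k][i][j]))
    return worst


rng = random.Random(0)
net = [Dense(1, 4, "sigmoid", rng), Dense(4, 1, "linear", rng)]
print(max_weight_grad_error(net, [0.7], [math.sin(0.7)]))  # expect a tiny number
```

A value far below 1e-6 suggests the backward pass is correct. A large value points to a bug in `backward`. Biases can be checked the same way.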

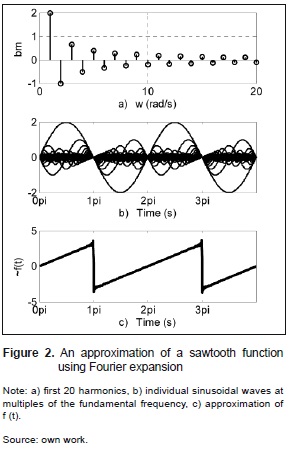

The tree form of the sine function and its operations is used to build the nodes of our neural network from the training data. Function approximation seeks to describe the behavior of very complicated functions by ensembles of simpler functions. We'll go step by step through the underlying ideas. We explore the phase diagram of approximation rates for deep neural networks.
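Here is one concrete instance of describing a function by an ensemble of simpler functions. The sketch writes the piecewise-linear interpolant of sin as a weighted sum of shifted ReLU "hinges", which is exactly a one-hidden-layer ReLU network. The choice of ReLU hinges and equally spaced knots is my assumption; the text does not name a particular family of simpler functions.

```python
import math


def relu(z):
    return max(0.0, z)


def hinge_ensemble(f, a, b, n):
    """Approximate f on [a, b] by g(x) = f(a) + sum_k c_k * relu(x - t_k).

    With knots t_k = a + k*(b - a)/n, c_0 is the slope of the first segment and
    each later c_k is the change in slope at t_k, so g is the piecewise-linear
    interpolant of f: a weighted sum of n shifted copies of one simple function.
    """
    knots = [a + (b - a) * k / n for k in range(n + 1)]
    vals = [f(t) for t in knots]
    slopes = [(vals[k + 1] - vals[k]) / (knots[k + 1] - knots[k]) for k in range(n)]
    coeffs = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, n)]

    def g(x):
        return vals[0] + sum(c * relu(x - t) for c, t in zip(coeffs, knots[:n]))

    return g


grid = [2 * math.pi * i / 1000 for i in range(1001)]
for n in (4, 8, 16, 32):
    g = hinge_ensemble(math.sin, 0.0, 2 * math.pi, n)
    err = max(abs(g(x) - math.sin(x)) for x in grid)
    print(n, round(err, 4))  # the maximum error shrinks as n grows
```

Linear interpolation has error at most h^2/8 * max|f''| for knot spacing h. Doubling the number of hinges therefore eventually cuts the error by about a factor of four.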

Block diagram of the supervised training algorithm [3]. This Java applet approximates three given scalar-valued functions of one variable using a three-layer feedforward neural network. Because the network uses hard-limiting functions, the applet approximates the functions inside the open interval (0, 1). In computer algebra and symbolic computation, a function can be represented as a tree [12].
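To illustrate the tree representation just mentioned, here is a minimal expression tree in Python. It has `+`, `*`, and `sin` nodes, plus evaluation and a symbolic derivative. The class names `Var`, `Const`, and `Op` and the operator set are hypothetical choices for this sketch, not the representation used in [12].

```python
import math
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Op:
    op: str                   # "+", "*" (binary) or "sin" (unary)
    args: Tuple["Node", ...]


Node = Union[Var, Const, Op]


def evaluate(node: Node, env: dict) -> float:
    """Evaluate the tree bottom-up, looking variables up in env."""
    if isinstance(node, Var):
        return env[node.name]
    if isinstance(node, Const):
        return node.value
    vals = [evaluate(a, env) for a in node.args]
    if node.op == "+":
        return vals[0] + vals[1]
    if node.op == "*":
        return vals[0] * vals[1]
    if node.op == "sin":
        return math.sin(vals[0])
    raise ValueError(f"unknown operator {node.op!r}")


def diff(node: Node, var: str) -> Node:
    """Symbolic derivative d(node)/d(var), returned as a new (unsimplified) tree."""
    if isinstance(node, Var):
        return Const(1.0 if node.name == var else 0.0)
    if isinstance(node, Const):
        return Const(0.0)
    a = node.args
    if node.op == "+":
        return Op("+", (diff(a[0], var), diff(a[1], var)))
    if node.op == "*":  # product rule
        return Op("+", (Op("*", (diff(a[0], var), a[1])),
                        Op("*", (a[0], diff(a[1], var)))))
    if node.op == "sin":  # chain rule, writing cos(u) as sin(u + pi/2)
        shifted = Op("sin", (Op("+", (a[0], Const(math.pi / 2))),))
        return Op("*", (shifted, diff(a[0], var)))
    raise ValueError(f"unknown operator {node.op!r}")


def to_string(node: Node) -> str:
    if isinstance(node, Var):
        return node.name
    if isinstance(node, Const):
        return repr(node.value)
    if node.op == "sin":
        return f"sin({to_string(node.args[0])})"
    return f"({to_string(node.args[0])} {node.op} {to_string(node.args[1])})"


# f(x) = 2*sin(x) + x as a tree: "+" at the root, "*" and "x" as its children.
x = Var("x")
f = Op("+", (Op("*", (Const(2.0), Op("sin", (x,)))), x))
print(to_string(f))                        # ((2.0 * sin(x)) + x)
print(evaluate(f, {"x": 0.0}))             # 0.0
print(evaluate(diff(f, "x"), {"x": 0.0}))  # 3.0, i.e. 2*cos(0) + 1
```

The derivative tree is not simplified and still contains terms like `0.0 * sin(x)`. A real computer algebra system would also simplify it.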

I want to approximate a region of the sine function using a simple 1-3 layer neural network. It works okay-ish for linear classification and the usual XOR problem, but for sine function approximation the results are not that satisfying. There are also settings that produce results that seem strange to me.

06/22/2019, by Dmitry Yarotsky et al.

"The Phase Diagram of Approximation Rates for Deep Neural Networks" (Skoltech). Very important results have been established in this branch of mathematics. This is achieved by using trigonometric functions, tensor products, or power terms of the input.
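One concrete reading of "power terms of the input" is to expand x into the features 1, x, x^2, ..., x^d and fit a linear model on top of them. The sketch below does this by least squares for sin on [-pi, pi]. The degrees, the rescaling by pi, and solving via the normal equations are my assumptions. Trigonometric or tensor-product features would slot into the same `rows` construction.

```python
import math


def fit_power_features(xs, ys, degree, scale):
    """Least-squares fit y ~ sum_k c_k * (x/scale)**k for k = 0..degree.

    Builds the normal equations (A^T A) c = A^T y and solves them by Gaussian
    elimination with partial pivoting. Dividing by `scale` keeps the powers
    in [-1, 1] so the system stays reasonably well conditioned.
    """
    n = degree + 1
    rows = [[(x / scale) ** k for k in range(n)] for x in xs]
    # Augmented matrix [A^T A | A^T y].
    m = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
         + [sum(r[i] * y for r, y in zip(rows, ys))] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coeffs = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        coeffs[i] = (m[i][n] - sum(m[i][j] * coeffs[j] for j in range(i + 1, n))) / m[i][i]
    return coeffs


def evaluate_poly(coeffs, x, scale):
    return sum(c * (x / scale) ** k for k, c in enumerate(coeffs))


xs = [-math.pi + 2 * math.pi * i / 200 for i in range(201)]
ys = [math.sin(x) for x in xs]
for degree in (3, 5, 7):
    c = fit_power_features(xs, ys, degree, scale=math.pi)
    err = max(abs(evaluate_poly(c, x, math.pi) - y) for x, y in zip(xs, ys))
    print(degree, err)  # max error on the sample grid shrinks as degree grows
```

Because sin is odd and the grid is symmetric, the fitted even-power coefficients come out near zero.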

In this chapter, I give a simple and mostly visual explanation of the universality theorem. You'll understand some of the limitations of the result. Here we will only name a few results that bear a direct relation to our goal of better understanding neural networks. Stengel, "Smooth Function Approximation Using Neural Networks," IEEE Transactions on Neural Networks, vol. …
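Visual explanations of universality are commonly built from "bumps" made by pairs of steep sigmoid neurons. Here is a hedged sketch in my own notation; the symbols $w$, $s_k$, $\xi_k$, and $N$ are assumptions, not taken from the text. With $\sigma(z) = 1/(1+e^{-z})$:

$$
\lim_{w\to\infty}\sigma\big(w(x-s)\big)=
\begin{cases}1, & x>s\\ 0, & x<s\end{cases}
$$

$$
f(x)\approx\sum_{k=1}^{N} f(\xi_k)\Big[\sigma\big(w(x-s_{k-1})\big)-\sigma\big(w(x-s_k)\big)\Big],
\qquad s_0<s_1<\dots<s_N,\quad \xi_k\in[s_{k-1},s_k].
$$

Each bracketed term uses two hidden neurons with input weight $w$ and biases $-w s_{k-1}$ and $-w s_k$. Their output weights are $\pm f(\xi_k)$ on a linear output unit. As $w\to\infty$, the sum tends to a step function equal to $f(\xi_k)$ on $(s_{k-1}, s_k)$. For a 1-Lipschitz function such as $\sin$, that step function is within $\max_k (s_k - s_{k-1})$ of $f$ on those open subintervals.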

Yes, I read the related posts (link 1).